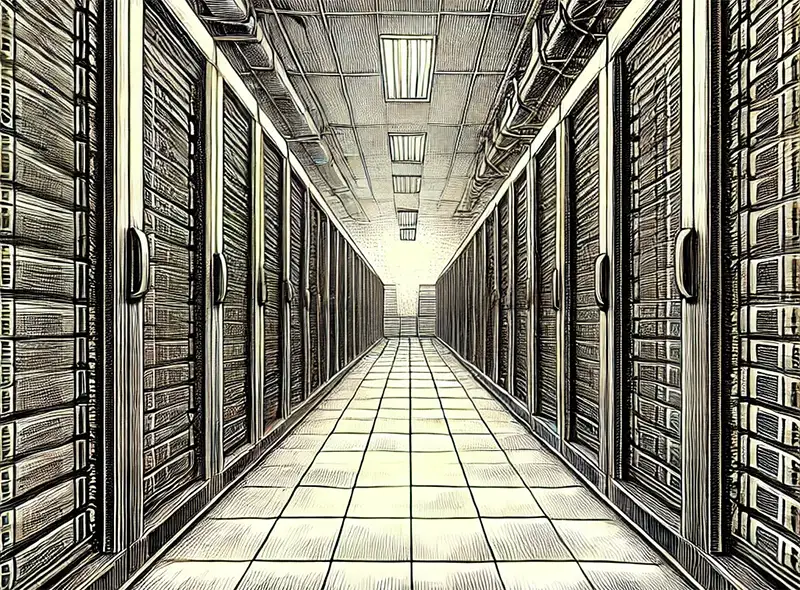

Hyperscalers such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud dominate enterprise cloud computing. The infrastructure behind these platforms is massive and complex, and it depends on cutting-edge hardware capable of handling enormous workloads, including increasingly sophisticated AI and machine learning tasks. Below, we explore some of the key hardware providers that supply essential components to these giant data centers, and how each contributes to the broader hyperscaler ecosystem.

1. Nvidia (NVDA)

What They Provide:

- Graphics Processing Units (GPUs): The go-to for high-performance AI training and inference.

- Networking & DPUs: Data center networking and data processing units, a product line built on the Mellanox acquisition.

Why It Matters:

Nvidia has established itself as the gold standard for AI-related hardware, providing GPUs that hyperscalers rely on for everything from natural language processing to computer vision. Their hardware is often at the core of AI clusters powering services like image recognition, recommendation systems, and large language models.

2. Advanced Micro Devices (AMD)

What They Provide:

- Server CPUs (EPYC): High-performance, cost-efficient CPUs for data center and cloud environments.

- GPUs (Instinct MI Series, formerly Radeon Instinct): Used for AI and high-performance computing.

- FPGAs (Xilinx): After acquiring Xilinx, AMD expanded its portfolio to include Field-Programmable Gate Arrays for specialized AI tasks.

Why It Matters:

AMD has made significant inroads into data center CPU markets that were once dominated by Intel. Its EPYC line delivers strong performance, and with the Xilinx acquisition, AMD is well-positioned for emerging AI, networking, and edge-computing demands.

3. Intel (INTC)

What They Provide:

- Data Center CPUs (Xeon): Historically the backbone of servers in hyperscale data centers.

- AI Accelerators (Habana Labs): Specialized processors for training and inference.

- FPGAs (Altera): For custom AI workflows and hardware acceleration.

Why It Matters:

While Intel faces growing competition, it remains a major supplier to hyperscalers around the globe. Intel continues to invest heavily in AI accelerators and specialized silicon to maintain its status as a critical hardware player in cloud environments.

4. Broadcom (AVGO)

What They Provide:

- Semiconductors for Networking: Switch ASICs (Tomahawk, Trident) and custom solutions for data center interconnects.

- Storage & Custom Silicon: Controllers and SoCs powering enterprise and cloud storage systems.

Why It Matters:

Networking is the lifeblood of a data center, and Broadcom’s switch chips are found in the top-tier networking hardware used by hyperscalers. Reliability and high throughput are essential in these massive computing environments, making Broadcom indispensable.

5. Marvell Technology (MRVL)

What They Provide:

- Networking & Storage Controllers: Ethernet, Fibre Channel, and custom SoCs that handle data center traffic.

- Data Processing Units (DPUs): Offload and accelerate specialized compute workloads.

Why It Matters:

Marvell’s focus on high-speed connectivity and custom-designed SoCs aligns well with hyperscaler demands for performance and efficiency. As data center networks continue to evolve, Marvell’s solutions help optimize throughput for AI workloads and big data analytics.

6. Micron Technology (MU)

What They Provide:

- Memory Solutions: DRAM, NAND flash, and high-bandwidth memory (HBM) for AI and HPC.

- Storage: SSDs that leverage NAND flash for enterprise-grade performance.

Why It Matters:

AI workloads are incredibly memory-intensive. Fast, high-capacity DRAM and advanced memory modules (like HBM) are critical for keeping GPUs and CPUs fed with data. Hyperscalers consume enormous volumes of memory, making Micron a key supplier.

7. Super Micro Computer (SMCI)

What They Provide:

- Server Hardware: High-density, customizable servers optimized for AI and HPC.

- Storage & Networking Appliances: Built for data center deployments.

Why It Matters:

While not as large as traditional OEMs like Dell or HPE, Super Micro has carved out a strong niche in purpose-built hardware. Its modular designs can incorporate multiple GPUs or specialized accelerators, making them attractive for hyperscalers building next-gen AI clusters.

8. Arista Networks (ANET)

What They Provide:

- Networking Switches & Routers: Designed for hyperscale, leaf-spine architectures.

- Cloud Networking Software: EOS (Extensible Operating System) for efficient data center management.

Why It Matters:

Arista’s low-latency, high-bandwidth networking solutions are a mainstay in modern data centers. As AI workloads scale horizontally across servers and GPUs, high-performance networking becomes essential to avoid bottlenecks.
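To make the bottleneck concern concrete, a common back-of-the-envelope check in leaf-spine designs is the oversubscription ratio of a leaf switch: total server-facing bandwidth divided by total uplink bandwidth toward the spines. The sketch below is a minimal illustration and does not describe any specific Arista product. The function name, port counts, and port speeds are all assumed values chosen only to show the arithmetic.

```python
def oversubscription_ratio(downlink_ports: int, downlink_gbps: float,
                           uplink_ports: int, uplink_gbps: float) -> float:
    """Return server-facing bandwidth divided by spine-facing bandwidth for one leaf."""
    if uplink_ports <= 0 or uplink_gbps <= 0:
        raise ValueError("a leaf switch needs at least one uplink with positive speed")
    downlink_total = downlink_ports * downlink_gbps  # Gb/s toward servers
    uplink_total = uplink_ports * uplink_gbps        # Gb/s toward spines
    return downlink_total / uplink_total


# Hypothetical leaf: 48 server ports at 100 Gb/s, 8 uplinks at 400 Gb/s.
ratio = oversubscription_ratio(48, 100, 8, 400)
print(f"oversubscription ratio: {ratio}:1")
```

A ratio of 1.0 means the uplinks can carry everything the servers can send at once. A ratio above 1.0 means traffic that leaves the rack can be throttled when many servers transmit together, which is the kind of bottleneck that distributed AI training tends to expose.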

9. Cisco Systems (CSCO)

What They Provide:

- Enterprise Networking & Data Center Solutions: Switches, routers, and server hardware (UCS).

- AI Partnerships: Working with companies like Nvidia to offer integrated AI-ready infrastructure.

Why It Matters:

Though historically enterprise-focused, Cisco maintains a strong presence in large data centers. As demand for AI-ready networks surges, the company’s hardware and software innovations remain critical for many hyperscaler use cases.

10. Pure Storage (PSTG)

What They Provide:

- All-Flash Storage Arrays: Low-latency, high-throughput storage solutions.

- AI Reference Architectures (AIRI): Developed in partnership with Nvidia for AI workloads.

Why It Matters:

In AI training and inference, data throughput can be a major bottleneck. Pure Storage’s flash-based solutions alleviate these constraints, ensuring that GPUs and other accelerators aren’t idle waiting for data.
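The idle-accelerator point can be approximated with simple arithmetic: the storage layer has to deliver at least as many bytes per second as the GPUs consume. The following is a rough sketch under stated assumptions, not a sizing method from Pure Storage or Nvidia. The function names and every number in the example (GPU count, samples per second, bytes per sample, available throughput) are hypothetical.

```python
def required_read_gb_per_s(num_gpus: int, samples_per_s_per_gpu: float,
                           bytes_per_sample: float) -> float:
    """Aggregate read rate in GB/s needed so that no GPU waits on input data."""
    total_bytes_per_s = num_gpus * samples_per_s_per_gpu * bytes_per_sample
    return total_bytes_per_s / 1e9


def max_gpu_utilization(available_gb_per_s: float, required_gb_per_s: float) -> float:
    """Upper bound on GPU busy time if storage throughput is the only limit."""
    if required_gb_per_s <= 0:
        return 1.0
    return min(1.0, available_gb_per_s / required_gb_per_s)


# Hypothetical cluster: 64 GPUs, each consuming 2,000 samples/s of 150 KB each.
needed = required_read_gb_per_s(64, 2000, 150_000)
# Hypothetical storage system that sustains 9.6 GB/s of reads.
utilization = max_gpu_utilization(9.6, needed)
print(f"needed: {needed:.1f} GB/s, GPU utilization ceiling: {utilization:.0%}")
```

In this made-up scenario the storage delivers half of what the GPUs could consume, so the accelerators would sit idle roughly half the time regardless of how fast they are. Real pipelines also depend on caching, prefetching, and data format, which this estimate ignores.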

Other Notable Mentions

- TSMC (TSM): The world’s largest semiconductor foundry, manufacturing advanced chips for Nvidia, AMD, Apple, and more.

- Samsung Electronics (KRX: 005930): A leader in DRAM, NAND flash, and systems-on-chip for mobile and AI applications.

- SK hynix (KRX: 000660): Another major DRAM and NAND flash supplier.

- Lattice Semiconductor (LSCC): Known for low-power FPGAs used in edge AI, embedded computing, and data center functions.

Key Factors to Watch

- Supply Chain Dynamics: Semiconductor supply can be cyclical, and disruptions can impact everything from pricing to availability.

- R&D Investment: AI demands constantly evolving hardware. Companies investing in next-gen chips (GPUs, DPUs, accelerators) will likely have an edge.

- Market Competition: Giant cloud providers can negotiate aggressively, putting pricing pressure on suppliers. Having diversified product lines can mitigate risk.

- Technological Roadmaps: Performance and power efficiency are paramount. Watch for innovations like advanced packaging, photonics, and new memory technologies that could shift market dynamics.

- Balancing Custom vs. Standard Solutions: Hyperscalers often seek customized hardware for their unique workloads, but suppliers must also cater to a broader market to stay profitable and resilient.

Final Thoughts

The hyperscaler hardware ecosystem is both diverse and highly specialized. From GPUs that drive AI training to networking switches that connect thousands of servers, these components form the backbone of modern cloud data centers. As AI and big data applications become ever more integral to business operations, the demand for cutting-edge, efficient hardware will only increase.

Whether you’re an enthusiast tracking technology trends or an investor exploring the AI and cloud infrastructure landscape, understanding the core players and the roles they play is crucial. With rapid advancements on the horizon, including next-gen chip designs, quantum computing, and new memory solutions, this ecosystem will remain one of the most dynamic and influential in the tech sector.

Disclaimer: This blog post is for informational purposes only and does not constitute financial advice. Always perform your own research or consult a professional before making investment decisions.

Analysis assisted by OpenAI model o1